P2P-MPI's objectives

P2P-MPI aims to provide a means to execute parallel Java programs on a set of interconnected hosts (possibly a cluster). For parallelization, P2P-MPI provides an MPJ (MPI for Java) library that lets processes communicate during execution. The originality of this implementation lies in its fault tolerance through replication (see demo). P2P-MPI also provides an original process launcher. As in a P2P file-sharing system, a user who starts P2P-MPI agrees to share their CPU and joins the P2P network of available hosts. When the user requests an execution involving several hosts, the system searches for available and suitable resources owned by peers, copies the programs and data to the selected hosts, and starts the programs on every host.

Features

- Offers a message-passing programming model to the programmer.

- No need for per-OS binaries (execution of Java .class files).

- Self-configuration of platforms for program execution (other grid nodes are discovered through a peer-to-peer organized group).

- Enables fault tolerance for applications: processes are transparently replicated, and users configure the robustness of the execution platform by requesting more or fewer replicas.
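The replication idea can be illustrated with a small model. The following Java sketch is hypothetical: the class `ReplicaPlacement`, its round-robin placement rule and its survival test are our own simplifications, not P2P-MPI's actual placement or failure-detection logic. It places `replicas` copies of each rank on distinct hosts and checks whether every rank still has at least one replica running after some hosts fail.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/**
 * Hypothetical model of replica placement: each MPI rank gets 'replicas'
 * copies, each on a different host.
 */
final class ReplicaPlacement {

    /** Returns placement.get(rank) = host indices holding that rank's replicas. */
    static List<List<Integer>> place(int ranks, int replicas, int hosts) {
        if (replicas < 1 || replicas > hosts) {
            throw new IllegalArgumentException("need 1 <= replicas <= hosts");
        }
        List<List<Integer>> placement = new ArrayList<>();
        int next = 0;
        for (int rank = 0; rank < ranks; rank++) {
            List<Integer> hostsForRank = new ArrayList<>();
            for (int k = 0; k < replicas; k++) {
                // Round-robin: the 'replicas' consecutive slots taken for one rank
                // map to distinct hosts because replicas <= hosts.
                hostsForRank.add(next % hosts);
                next++;
            }
            placement.add(hostsForRank);
        }
        return placement;
    }

    /** The run can continue if every rank keeps at least one replica on a live host. */
    static boolean survives(List<List<Integer>> placement, Set<Integer> failedHosts) {
        for (List<Integer> hostsForRank : placement) {
            boolean alive = false;
            for (int host : hostsForRank) {
                if (!failedHosts.contains(host)) {
                    alive = true;
                    break;
                }
            }
            if (!alive) {
                return false;
            }
        }
        return true;
    }
}
```

For example, with 4 ranks, 2 replicas and 4 hosts, rank 0 lands on hosts 0 and 1: losing host 0 alone is tolerated, while losing hosts 0 and 1 together loses rank 0.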

What is P2P-MPI?

P2P-MPI is both an MPJ implementation and a middleware for the management of computing resources. Similar to the idea of sharing files through a P2P system, P2P-MPI lets its users share their CPUs and access other users' CPUs. P2P-MPI is oriented toward desktop grids: it can be run by an ordinary user (no root privileges needed) and provides a transparent fault-tolerance mechanism based on process replication.

Illustrations

A small demo of fault tolerance using replication in P2P-MPI.

A parallelized scene rendering program running on 4 computers is started with a replication level of 2.

During execution, one host failure is simulated by killing all processes on that host.

We see that the rendering continues and finishes despite the failure.

What's New?

Version 0.29.0 is released.

0.29.0 improves data management.

Larger files can be transmitted, and a disk cache mechanism is provided (see here).
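The design of the 0.29.0 disk cache is not described here. One common way to avoid re-sending data a host already holds is a content-addressed cache, sketched below in Java under that assumption; the class `DiskCache` and its methods are hypothetical, not P2P-MPI's API. Files are keyed by the SHA-256 digest of their content, and the digest is computed by streaming so that large files need not fit in memory.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/**
 * Hypothetical content-addressed disk cache: each file is stored under the
 * hex SHA-256 digest of its content, so identical data is kept only once.
 */
final class DiskCache {
    private final Path dir;

    DiskCache(Path dir) throws IOException {
        this.dir = Files.createDirectories(dir);
    }

    /** Streams the file through SHA-256 so large files need not fit in memory. */
    static String sha256Hex(Path file) throws IOException {
        MessageDigest md;
        try {
            md = MessageDigest.getInstance("SHA-256");
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 must be available on every Java platform", e);
        }
        byte[] buffer = new byte[64 * 1024];
        try (InputStream in = Files.newInputStream(file)) {
            int n;
            while ((n = in.read(buffer)) != -1) {
                md.update(buffer, 0, n);
            }
        }
        StringBuilder hex = new StringBuilder();
        for (byte b : md.digest()) {
            hex.append(String.format("%02x", b));
        }
        return hex.toString();
    }

    /** True if content with this digest is already cached, i.e. no transfer is needed. */
    boolean contains(String digest) {
        return Files.exists(path(digest));
    }

    Path path(String digest) {
        return dir.resolve(digest);
    }

    /** Copies the file into the cache if its content is not there yet; returns the key. */
    String put(Path source) throws IOException {
        String digest = sha256Hex(source);
        Path target = path(digest);
        if (!Files.exists(target)) {
            // Copy to a temporary file first and then rename it, so an interrupted
            // copy never appears under its final cache key.
            Path tmp = Files.createTempFile(dir, "incoming", ".part");
            Files.copy(source, tmp, StandardCopyOption.REPLACE_EXISTING);
            Files.move(tmp, target, StandardCopyOption.REPLACE_EXISTING);
        }
        return digest;
    }
}
```

In a transfer protocol built on this idea, the sender would first send the digest, and the receiver would request the file content only if `contains(digest)` returns false.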

0.28.2 is a bugfix release of 0.28.0, and 0.27.2 is a backport to the single-port version. In P2P-MPI 0.28.0, the communication layer was completely rewritten to use the Java NIO classes. Performance is far better, but this implementation requires access to a wider range of TCP ports. Hence, we call it a multiple-port (MP) implementation. Previous versions required a single TCP port to better meet firewall policy requirements, and are called single-port (SP) implementations. Upcoming releases should offer both drivers in a single distribution.

Version 0.27.0 is released.

P2P-MPI no longer needs JXTA.

JXTA did not fully meet our needs, so we decided to build our own, much simpler, peer-to-peer infrastructure based on the SuperNode principle (as used, e.g., in Gnutella).

This was also an opportunity to embed network performance information about peers: MPD now has a measure of the network latency to each peer. In addition, a new service called the Reservation Service (RS) provides a better abstraction for host reservation.
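A SuperNode essentially acts as a rendezvous point where peers announce themselves and learn about other peers. The Java sketch below models only that bookkeeping, as an illustration under stated assumptions: `SuperNodeRegistry`, the keep-alive through repeated `register` calls and the time-to-live expiry are hypothetical, not P2P-MPI's protocol.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical SuperNode registry: peers register at boot time and then
 * periodically re-register; entries not refreshed within the TTL expire.
 */
final class SuperNodeRegistry {
    private final long ttlMillis;
    private final Map<String, Long> lastSeen = new HashMap<>();

    SuperNodeRegistry(long ttlMillis) {
        this.ttlMillis = ttlMillis;
    }

    /** Records a boot-time registration or a keep-alive from a peer at time 'now'. */
    synchronized void register(String peer, long now) {
        lastSeen.put(peer, now);
    }

    /** Drops expired entries and returns the remaining peers in sorted order. */
    synchronized List<String> livePeers(long now) {
        lastSeen.values().removeIf(seenAt -> now - seenAt > ttlMillis);
        List<String> peers = new ArrayList<>(lastSeen.keySet());
        Collections.sort(peers);
        return peers;
    }
}
```

Passing the current time explicitly, rather than reading the clock inside the class, keeps the expiry logic deterministic and easy to test.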

This replacement brings some noticeable changes:

- A SuperNode *must* be started first, and peers must have this SuperNode configured at boot time.

- Some utilities have been renamed (e.g. runRDV becomes runSuperNode) and new utilities have been added (e.g. mpihost, which lists the peers known locally). See the quick command guide: http://www.p2pmpi.org/documentation/quick.html

- The runVisu utility has been reworked to accommodate the new framework, and can now use a proxy host (which caches SuperNode information) to keep the intrusiveness of visualization very low.

- The allocation strategy of p2pmpirun now makes use of network performance information: p2pmpirun selects the peers with the lowest latency. Two variants of this strategy, selectable at runtime (see p2pmpirun -a), either concentrate processes on a reduced set of peers (targeting multi-core machines) or, on the contrary, scatter processes over more peers (which may provide more memory overall).

- Last, some properties have changed in P2P-MPI.conf, so you should skim it to check that it still fits your needs.
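The latency-based allocation described above can be sketched as follows. This is a hypothetical Java model, not the p2pmpirun implementation: the `Peer` class, the `Strategy` values and the rule that CONCENTRATE fills a peer up to its core count are assumptions chosen to mirror the two variants. Peers are ordered by increasing latency, and rank i is assigned to the i-th entry of the returned list.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical view of a peer: measured latency and number of cores. */
final class Peer {
    final String name;
    final double latencyMs;
    final int cores;

    Peer(String name, double latencyMs, int cores) {
        this.name = name;
        this.latencyMs = latencyMs;
        this.cores = cores;
    }
}

/** Hypothetical names for the two variants: pack on few peers, or spread out. */
enum Strategy { CONCENTRATE, SPREAD }

final class Allocator {
    /**
     * Returns one peer name per process (list index = rank). Peers are used in
     * order of increasing latency; CONCENTRATE fills each peer up to its core
     * count, SPREAD puts at most one process on each peer.
     */
    static List<String> allocate(List<Peer> peers, int processes, Strategy strategy) {
        List<Peer> byLatency = new ArrayList<>(peers);
        byLatency.sort(Comparator.comparingDouble(p -> p.latencyMs));
        List<String> ranks = new ArrayList<>();
        for (Peer p : byLatency) {
            if (ranks.size() == processes) {
                break;
            }
            int slots = (strategy == Strategy.CONCENTRATE) ? p.cores : 1;
            for (int i = 0; i < slots && ranks.size() < processes; i++) {
                ranks.add(p.name);
            }
        }
        if (ranks.size() < processes) {
            throw new IllegalStateException("not enough peers for " + processes + " processes");
        }
        return ranks;
    }
}
```

With peers b (1 ms, 2 cores), c (3 ms, 2 cores) and a (5 ms, 4 cores), asking for 3 processes gives [b, b, c] with CONCENTRATE and [b, c, a] with SPREAD.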

Visualization

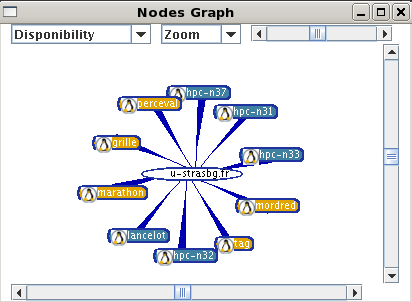

Enhanced monitoring tool: runVisu.

This tool provides a view of the participating peers and their respective states: whether they are currently available for a task, working on a task (and which one), or not responding.

The tool may also be used to see how MPI processes have been mapped (which rank has been given to which computer).

Last, computer icons may be clicked to get detailed characteristics of the resource, such as CPU frequency, available RAM, system name, etc. [This feature currently works for Linux, MacOSX and Solaris].
